In October 2012, HBR called Data Scientist the sexiest job of the 21st century. For the following decade, that prediction seemed to hold up well.

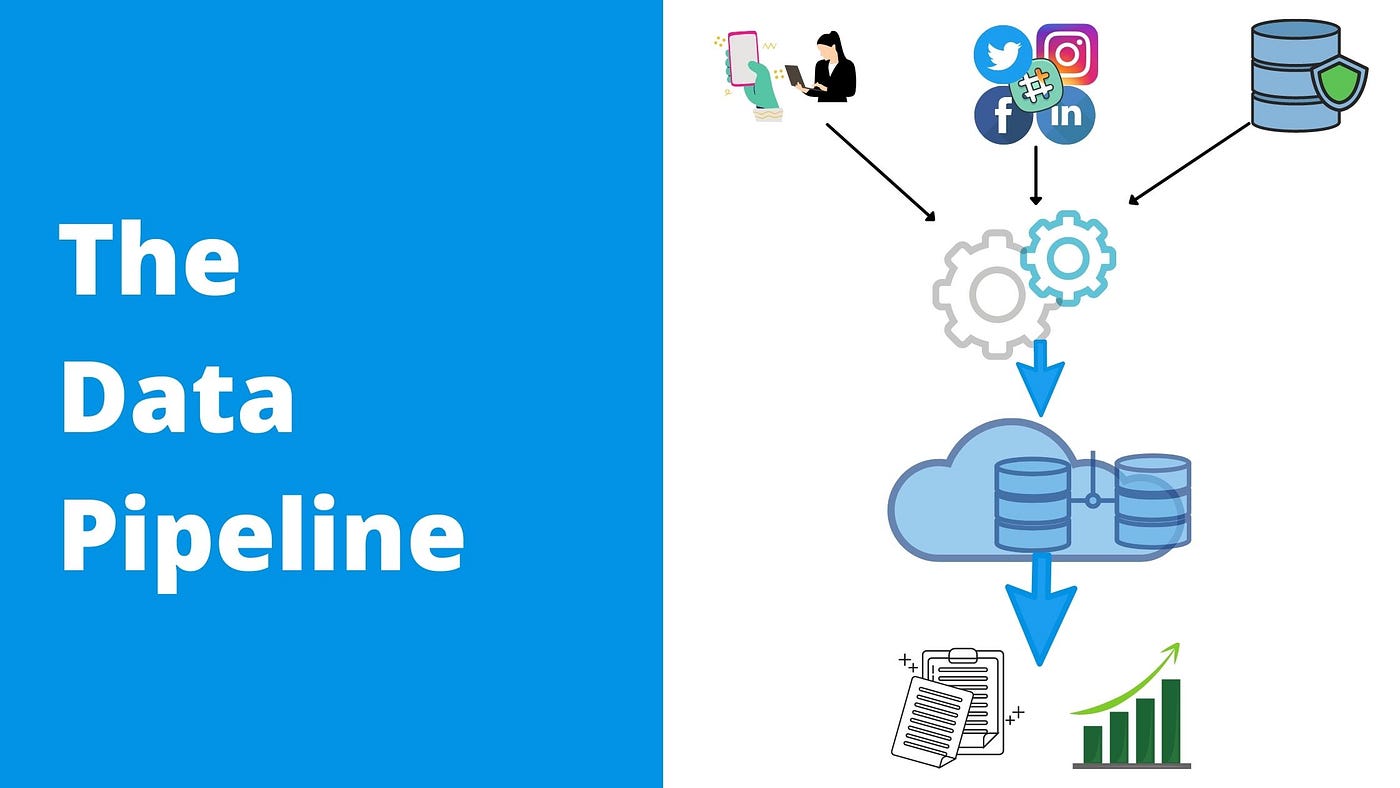

However, companies soon realized that without proper data infrastructure and quality data, data science projects were bound to fail. This created an astronomical demand for people who could fix these issues: Data Engineers (DEs for short).

So, you are here because you want to become a Data Engineer, but why? Let me answer that first.

Why Become a “Data Engineer” in 2022?

Right now, the supply of quality data engineers is extremely low and demand is astronomical. As basic economics will tell you, when supply cannot match demand, prices (in this case, salaries) are bound to go up.

“With great demand comes great rewards”

According to Glassdoor, AmbitionBox and Payscale, the average Data Engineer salary in India is 8–9 lakhs per annum. However, salaries range from 3–4 lakhs for freshers to upwards of 30 lakhs for people with 10+ years of experience.

More and more people are even considering a move from other data roles into Data Engineering. It’s a great move, even if you don’t work in the data field yet.

Great, now that we have addressed the WHY, let’s go deep into the HOW.

What are the skills needed to become a Data Engineer?

Just like Data Science or Full Stack Development, Data Engineering is a multidisciplinary role. You need to learn a lot of interdependent topics before becoming a great Data Engineer.

However, not everything is needed just to start or break into the role.

Note to beginners

Beginners shouldn’t feel overwhelmed by the huge set of tools and topics to learn.

There are several stages of learning involved, and as a beginner you should only concentrate on perfecting the fundamentals.

Once you feel comfortable, you can move on to the advanced topics. With time and experience, you will feel at home.

As noted above, I will divide the complete set of skills and subjects into Fundamentals, Advanced topics, and Good To Have.

Fundamentals

The foundation is the most important part of any building, and it’s where all construction starts. Hence, it’s important to build it well.

It’s easy to get distracted. However, it’s important to spend 3–4 months building the fundamentals.

Once this part is mastered the next phase of learning will be much easier.

Below are the fundamental topics to cover, in no particular order.

1. Database Concepts

Basic database concepts, normalization, keys, constraints, database storage, etc.
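To make keys and constraints concrete, here is a minimal sketch using Python’s built-in `sqlite3` module as a stand-in for a real database server. The `customers` / `orders` tables and their columns are hypothetical; the point is that a normalized design stores each customer once, and the database itself rejects duplicate, orphaned, or invalid rows.

```python
import sqlite3

def build_schema(conn: sqlite3.Connection) -> None:
    """Create two normalized tables linked by a foreign key (hypothetical schema)."""
    # SQLite only enforces foreign keys when this pragma is enabled on the connection.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.execute("""
        CREATE TABLE customers (
            customer_id INTEGER PRIMARY KEY,   -- primary key: unique identity per row
            email       TEXT NOT NULL UNIQUE   -- constraints: required, no duplicates
        )""")
    conn.execute("""
        CREATE TABLE orders (
            order_id    INTEGER PRIMARY KEY,
            customer_id INTEGER NOT NULL REFERENCES customers(customer_id),  -- foreign key
            amount      REAL NOT NULL CHECK (amount > 0)                     -- check constraint
        )""")

def try_insert(conn: sqlite3.Connection, sql: str, params: tuple) -> bool:
    """Return True if the insert succeeds, False if a constraint rejects it."""
    try:
        conn.execute(sql, params)
        return True
    except sqlite3.IntegrityError:
        return False

conn = sqlite3.connect(":memory:")
build_schema(conn)
ok_customer = try_insert(conn, "INSERT INTO customers VALUES (?, ?)", (1, "a@example.com"))
dup_email = try_insert(conn, "INSERT INTO customers VALUES (?, ?)", (2, "a@example.com"))
ok_order = try_insert(conn, "INSERT INTO orders VALUES (?, ?, ?)", (10, 1, 99.5))
orphan_order = try_insert(conn, "INSERT INTO orders VALUES (?, ?, ?)", (11, 42, 10.0))
negative_amount = try_insert(conn, "INSERT INTO orders VALUES (?, ?, ?)", (12, 1, -5.0))
print(ok_customer, dup_email, ok_order, orphan_order, negative_amount)
# → True False True False False
```

Note that SQLite only enforces foreign keys after `PRAGMA foreign_keys = ON`; server databases such as PostgreSQL enforce them by default.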

2. Programming

Basic syntax, working with files, connecting to databases, building basic APIs, and working with structured (databases and tables) and semi-structured (XML, JSON, etc.) data.
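As a small example of these programming basics, the sketch below parses the same hypothetical records from JSON and from XML using only the Python standard library, then writes them to a CSV file and reads them back. The payloads and the `rows_from_*` helpers are illustrative assumptions, not a prescribed approach.

```python
import csv
import json
import tempfile
import xml.etree.ElementTree as ET
from pathlib import Path

# Hypothetical sample payloads: the same two records as JSON and as XML.
JSON_PAYLOAD = '[{"id": 1, "city": "Pune"}, {"id": 2, "city": "Delhi"}]'
XML_PAYLOAD = """<rows>
  <row id="1"><city>Pune</city></row>
  <row id="2"><city>Delhi</city></row>
</rows>"""

def rows_from_json(text: str) -> list[dict]:
    """Parse a JSON array of objects into plain dicts."""
    return [{"id": int(r["id"]), "city": r["city"]} for r in json.loads(text)]

def rows_from_xml(text: str) -> list[dict]:
    """Read the id attribute and <city> child of every <row> element."""
    root = ET.fromstring(text)
    return [{"id": int(row.get("id")), "city": row.findtext("city")} for row in root.iter("row")]

def write_then_read_csv(rows: list[dict], path: Path) -> list[dict]:
    """Write rows to a CSV file, then read them back as dicts."""
    with path.open("w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=["id", "city"])
        writer.writeheader()
        writer.writerows(rows)
    with path.open(newline="") as f:
        return list(csv.DictReader(f))

json_rows = rows_from_json(JSON_PAYLOAD)
xml_rows = rows_from_xml(XML_PAYLOAD)
with tempfile.TemporaryDirectory() as tmp:
    from_csv = write_then_read_csv(json_rows, Path(tmp) / "cities.csv")
print(json_rows == xml_rows)  # → True
print(from_csv[0])            # → {'id': '1', 'city': 'Pune'}
```

Notice that values read back from CSV are strings (`'1'`, not `1`): CSV carries no type information, so casting the data correctly is part of your job.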

3. SQL

Basic data extraction, joining tables, keys and constraints, window functions, aggregate functions, etc., plus Data Definition (DDL) and Data Manipulation (DML) queries.

Recommended: the scenario-based, hands-on SQL series from Mentorskool.
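Here is a hedged sketch of a join, an aggregate function, and two window functions side by side, again using `sqlite3` so it runs without a server. The tables and data are hypothetical, and window functions need SQLite 3.25 or newer (which recent Python versions bundle).

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE departments (dept_id INTEGER PRIMARY KEY, dept_name TEXT NOT NULL);
    CREATE TABLE employees (
        emp_id  INTEGER PRIMARY KEY,
        name    TEXT NOT NULL,
        dept_id INTEGER REFERENCES departments(dept_id),
        salary  INTEGER NOT NULL
    );
    INSERT INTO departments VALUES (1, 'Data'), (2, 'Sales');
    INSERT INTO employees VALUES (1, 'Asha', 1, 120), (2, 'Ravi', 1, 90), (3, 'Meera', 2, 80);
""")

# Aggregate: GROUP BY collapses each department into a single row.
per_dept = conn.execute("""
    SELECT d.dept_name, COUNT(*) AS headcount, SUM(e.salary) AS total_salary
    FROM employees e
    JOIN departments d ON d.dept_id = e.dept_id
    GROUP BY d.dept_name
    ORDER BY d.dept_name
""").fetchall()

# Window functions: same join, but every employee row is kept.
ranked = conn.execute("""
    SELECT d.dept_name,
           e.name,
           e.salary,
           SUM(e.salary) OVER (PARTITION BY d.dept_name) AS dept_total,
           RANK() OVER (PARTITION BY d.dept_name ORDER BY e.salary DESC) AS rank_in_dept
    FROM employees e
    JOIN departments d ON d.dept_id = e.dept_id
    ORDER BY d.dept_name, rank_in_dept
""").fetchall()

print(per_dept)  # → [('Data', 2, 210), ('Sales', 1, 80)]
print(ranked)
# → [('Data', 'Asha', 120, 210, 1), ('Data', 'Ravi', 90, 210, 2), ('Sales', 'Meera', 80, 80, 1)]
```

The key difference: `GROUP BY` collapses each department into one row, while a window function (`OVER (PARTITION BY ...)`) computes the same department total but keeps every employee row alongside it.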

4. Data Warehouse and Data Modelling

Basic Data Warehouse concepts, data modelling for the Data Warehouse, Star and Snowflake schemas, Fact and Dimension tables, etc.
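A star schema is easier to grasp with a tiny example. In this sketch (hypothetical table and column names, with SQLite standing in for a warehouse), one fact table holds the numeric measures plus foreign keys, and two dimension tables hold the descriptive attributes.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Dimension tables: descriptive attributes, one row per entity.
    CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, product_name TEXT, category TEXT);
    CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, full_date TEXT, month TEXT);
    -- Fact table: numeric measures plus a foreign key to each dimension.
    CREATE TABLE fact_sales (
        date_key    INTEGER REFERENCES dim_date(date_key),
        product_key INTEGER REFERENCES dim_product(product_key),
        quantity    INTEGER,
        revenue     REAL
    );
    INSERT INTO dim_product VALUES (1, 'Laptop', 'Electronics'), (2, 'Desk', 'Furniture');
    INSERT INTO dim_date VALUES (20220101, '2022-01-01', '2022-01'), (20220201, '2022-02-01', '2022-02');
    INSERT INTO fact_sales VALUES (20220101, 1, 2, 1000.0), (20220101, 2, 1, 200.0), (20220201, 1, 1, 500.0);
""")

# A typical star-schema query: join the fact table to its dimensions, then aggregate.
rows = conn.execute("""
    SELECT d.month, p.category, SUM(f.revenue) AS revenue
    FROM fact_sales f
    JOIN dim_date d    ON d.date_key = f.date_key
    JOIN dim_product p ON p.product_key = f.product_key
    GROUP BY d.month, p.category
    ORDER BY d.month, p.category
""").fetchall()
print(rows)
# → [('2022-01', 'Electronics', 1000.0), ('2022-01', 'Furniture', 200.0), ('2022-02', 'Electronics', 500.0)]
```

In a snowflake schema, you would normalize the dimensions further, for example by moving `category` out of `dim_product` into its own `dim_category` table and joining through it.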

5. Cloud Fundamentals

Learn the basics of cloud computing: SaaS, PaaS and IaaS offerings, distributed computing, CapEx vs OpEx, elastic scalability, storage and compute in the cloud, and data stacks in the cloud.

6. Hadoop Ecosystem & Spark

History of Hadoop, Hadoop 1, 2 and 3, HDFS, MapReduce, YARN, Sqoop, Hive, Pig, HBase, Oozie, ZooKeeper, and Spark basics.

Basic MapReduce programming with Python / Java
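The classic first MapReduce program is a word count. The sketch below simulates the map, shuffle, and reduce phases in plain Python on a single machine, so it only illustrates the programming model. On a real cluster, Hadoop distributes the mappers and reducers and performs the shuffle for you (with Hadoop Streaming, the mapper and reducer would be separate scripts reading from stdin).

```python
from collections import defaultdict
from itertools import chain

def mapper(line: str):
    """Map phase: emit a (word, 1) pair for every word in a line."""
    for word in line.lower().split():
        yield word, 1

def shuffle(pairs):
    """Shuffle/sort phase: group all values by key, sorted by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return sorted(groups.items())

def reducer(key, values):
    """Reduce phase: combine all values emitted for one key."""
    return key, sum(values)

lines = ["spark and hadoop", "hadoop and hive"]
mapped = chain.from_iterable(mapper(line) for line in lines)
counts = dict(reducer(key, values) for key, values in shuffle(mapped))
print(counts)  # → {'and': 2, 'hadoop': 2, 'hive': 1, 'spark': 1}
```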

First end-to-end project: At this point you have all the skills required to create your first basic DE project. Concentrate on the following as you build it:

a. Scrape or collect free data from the web.

b. Convert the data into CSV / JSON and read it using Python.

c. Analyze and cleanse the data using Python.

d. Load the data into a warehouse / DB server.
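Steps b to d above can be sketched as a tiny extract, cleanse, load pipeline. This is a minimal illustration under stated assumptions: the inline `RAW_CSV` text stands in for data you scraped or downloaded, and an in-memory SQLite database stands in for the warehouse / DB server.

```python
import csv
import io
import sqlite3

# Hypothetical raw data standing in for something scraped or downloaded from the web.
RAW_CSV = """product,price,city
 Laptop ,1000,Pune
Phone,,Delhi
Laptop,1000,Pune
Desk,250,Mumbai
"""

def extract(text: str) -> list[dict]:
    """Read CSV text into a list of dicts (a real file works the same way via open())."""
    return list(csv.DictReader(io.StringIO(text)))

def cleanse(rows: list[dict]) -> list[tuple]:
    """Trim whitespace, drop rows without a price, cast types, and remove duplicates."""
    seen, clean = set(), []
    for row in rows:
        product, price, city = (row[k].strip() for k in ("product", "price", "city"))
        if not price:
            continue  # drop incomplete records
        record = (product, float(price), city)
        if record not in seen:
            seen.add(record)
            clean.append(record)
    return clean

def load(records: list[tuple], conn: sqlite3.Connection) -> int:
    """Load cleansed records into a table and return its row count."""
    conn.execute("CREATE TABLE IF NOT EXISTS products (product TEXT, price REAL, city TEXT)")
    conn.executemany("INSERT INTO products VALUES (?, ?, ?)", records)
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM products").fetchone()[0]

conn = sqlite3.connect(":memory:")
loaded = load(cleanse(extract(RAW_CSV)), conn)
print(loaded)  # → 2
```

In your actual project, swap `io.StringIO` for a real file and the SQLite connection for a driver for your chosen warehouse or database.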

Don’t miss the Zoomcamp series. It is hands down the best free Data Engineering course I have found.

Advanced topics

1. ETL using Python / Scala in Spark

Writing ETL code in Python / Scala: PySpark, Spark SQL, SparkContext, Spark jobs, spark-submit, and optimizing Spark jobs.

2. Data Processing Libraries / Constructs

RDDs, Datasets, DataFrames, NumPy, pandas, etc.

Different file types (CSV, JSON, Avro, Protocol Buffers, Parquet, and ORC).
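One distinction worth understanding early among these file types is row-oriented versus columnar storage: CSV, JSON and Avro store data record by record, while Parquet and ORC store it column by column. The sketch below (hypothetical data, plain Python) only illustrates the layout; real columnar formats add encoding, compression and per-column metadata on top.

```python
# Hypothetical records, laid out row by row as a row-oriented format would store them.
rows = [
    {"order_id": 1, "city": "Pune", "amount": 120.0},
    {"order_id": 2, "city": "Delhi", "amount": 80.0},
    {"order_id": 3, "city": "Pune", "amount": 45.5},
]

def to_columnar(records: list[dict]) -> dict[str, list]:
    """Pivot row records into one list per column, the layout columnar formats use."""
    columns = {name: [] for name in records[0]}
    for record in records:
        for name, value in record.items():
            columns[name].append(value)
    return columns

columns = to_columnar(rows)
# An analytical query such as SUM(amount) only needs to touch one column.
total_amount = sum(columns["amount"])
print(columns["city"])  # → ['Pune', 'Delhi', 'Pune']
print(total_amount)     # → 245.5
```

This is why analytical engines favor Parquet and ORC: a query that aggregates one column can skip reading the others entirely.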

3. NoSQL Databases

Pick any one (Cassandra / MongoDB). Graph databases are rarely needed, but good to have.

4. Workflow Management and Schedulers

This is a very important component of the modern Data Stack. Pick Airflow (the most preferred option and market leader) or an alternative such as Luigi or Prefect.
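At their core, orchestrators like Airflow model a pipeline as a DAG (directed acyclic graph) of tasks and run each task only after its upstream tasks finish. The sketch below shows just that dependency-resolution idea with Python’s standard `graphlib` module (Python 3.9+); the task names are hypothetical, and this is not Airflow code. Airflow adds scheduling, retries, parallel workers and a UI on top, and declares dependencies with operators such as `extract >> transform`.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
dag = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "load_warehouse": {"transform"},
    "refresh_dashboard": {"load_warehouse"},
}

def run_task(name: str, log: list[str]) -> None:
    """Stand-in for real work (an API call, a Spark job, a SQL script, ...)."""
    log.append(name)

def run_pipeline(graph: dict[str, set[str]]) -> list[str]:
    """Run every task only after all of its upstream dependencies have run."""
    log: list[str] = []
    for task in TopologicalSorter(graph).static_order():
        run_task(task, log)
    return log

execution_order = run_pipeline(dag)
print(execution_order)  # the two extract tasks may appear in either order
```

If the graph contains a cycle, `static_order()` raises `graphlib.CycleError`, which is exactly why orchestrators insist on acyclic graphs.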

5. Data Streaming

Data Velocity is one of the key parameters for Big Data and Data Engineering.

We all want real-time analysis and feedback on what’s working and what’s not; reverse ETL and real-time analytics have become must-haves for new businesses.

Apache Kafka, Apache Storm, Apache Flink, Spark Streaming.

Creating a streaming data pipeline
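A building block of most streaming pipelines is windowed aggregation. This hedged sketch counts hypothetical click events in 10-second tumbling (fixed, non-overlapping) windows with a Python generator, emitting each window’s result as soon as the window closes. It assumes events arrive in timestamp order; engines such as Flink and Spark Structured Streaming also handle late and out-of-order events using watermarks.

```python
from collections import Counter
from typing import Iterable, Iterator, Optional

# Hypothetical click events: (epoch_seconds, page), assumed to arrive in timestamp order.
EVENTS = [(0, "home"), (3, "cart"), (7, "home"), (10, "home"), (14, "checkout"), (21, "home")]

def tumbling_window_counts(
    events: Iterable[tuple[int, str]], size: int
) -> Iterator[tuple[int, Counter]]:
    """Yield (window_start, page_counts) each time a fixed `size`-second window closes."""
    current_start: Optional[int] = None
    counts: Counter = Counter()
    for ts, page in events:
        window_start = ts - ts % size  # e.g. ts=14, size=10 -> window starting at 10
        if current_start is not None and window_start != current_start:
            yield current_start, counts  # an event from a new window closes the old one
            counts = Counter()
        current_start = window_start
        counts[page] += 1
    if current_start is not None:
        yield current_start, counts  # flush the last window when the stream ends

windows = list(tumbling_window_counts(EVENTS, size=10))
for start, counts in windows:
    print(start, dict(counts))
# → 0 {'home': 2, 'cart': 1}
# → 10 {'home': 1, 'checkout': 1}
# → 20 {'home': 1}
```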

6. DE in the Cloud (AWS / GCP / Azure)

Cover the complete Data Engineering lifecycle on one of the major cloud providers: complete any one of the first three stacks below, plus the fourth (cloud data warehouses / lakes). The data offerings of Azure, Google and AWS are conceptually similar, so once you are comfortable with one, you can easily pick up the others.

Example stacks:

- Azure Stack: Azure Data Lake, Azure Synapse, Azure Data Factory, Azure Cosmos DB, Azure Event Hubs, Power BI.

Refer to this course by Ramesh Retnaswami on Data Factory, and also the one on Spark and Databricks here.

- Google Stack: BigQuery, Pub/Sub, Dataflow, Dataproc, Looker

- AWS Stack: AWS S3, AWS Kinesis, AWS Glue, Redshift, AWS Athena, Lambda, AWS RDS

- Cloud Data Warehouses / Lakes: Databricks, Snowflake

Second end-to-end project: The second project should involve more hands-on work and should be built like a real-world project.

Good To Have

1. Dashboarding Tools: In-depth knowledge of a specific dashboarding tool is not a must-have for a Data Engineering role. However, it is extremely valuable. Dashboards can help identify potential data quality issues and the impact of bad data, and can save developers a lot of time.

Power BI / Tableau / Looker are the primary players in this segment.

2. Docker: Docker keeps infrastructure-related complexity out of the way, making it easy to set up an independent, reproducible data environment.

3. DevOps / DataOps

4. Modern Data Stack: The Modern Data Stack refers to a set of independent tools, many of them open source. These tools give businesses flexibility: even SMBs and startups can now easily set up a modern data architecture without worrying about vendor lock-in and high licensing costs.

It’s good to understand the different tools in the stack and how they fit into the overall DE roadmap.

For example: Fivetran for data ingestion (ELT), Airflow for orchestration, any cloud warehouse / lake, dbt for data transformation, Hightouch for reverse ETL, Monte Carlo for data observability, etc.
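Data observability tools automate checks such as volume, null rates, uniqueness and freshness. The sketch below hand-rolls a few of those checks in plain Python so the idea is concrete; the rows, thresholds and `run_checks` helper are hypothetical, and this is not how Monte Carlo works internally. In dbt, the equivalent would typically be its built-in `not_null` and `unique` tests.

```python
from datetime import datetime, timedelta, timezone

UTC = timezone.utc
# Hypothetical rows from the latest load into an `orders` table.
batch = [
    {"order_id": 1, "amount": 120.0, "loaded_at": datetime(2022, 6, 1, 9, 0, tzinfo=UTC)},
    {"order_id": 2, "amount": None, "loaded_at": datetime(2022, 6, 1, 9, 5, tzinfo=UTC)},
    {"order_id": 2, "amount": 80.0, "loaded_at": datetime(2022, 6, 1, 9, 5, tzinfo=UTC)},
]

def run_checks(rows, now, max_null_rate=0.1, max_staleness=timedelta(hours=2)):
    """Return {check_name: passed} for volume, null-rate, uniqueness and freshness checks."""
    if not rows:
        return {"not_empty": False}  # nothing else is meaningful on an empty load
    null_rate = sum(r["amount"] is None for r in rows) / len(rows)
    ids = [r["order_id"] for r in rows]
    newest = max(r["loaded_at"] for r in rows)
    return {
        "not_empty": True,
        "amount_null_rate_ok": null_rate <= max_null_rate,
        "order_id_unique": len(ids) == len(set(ids)),
        "fresh": now - newest <= max_staleness,
    }

results = run_checks(batch, now=datetime(2022, 6, 1, 10, 0, tzinfo=UTC))
failed = [name for name, passed in results.items() if not passed]
print(failed)  # → ['amount_null_rate_ok', 'order_id_unique']
```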

Final Project: Now that all the important lessons are done, put what you have learned to use by building an end-to-end pipeline as a capstone project. The important topics to incorporate are:

- Building containers to run the ETL/ELT pipelines

- Creating a pipeline in Python to load data into the lake

- Setting up orchestration to run the code

- Running batch and stream processing jobs on Spark

- Data Modelling for Warehouse

- Loading data from the lake to the warehouse

- Transforming data in the warehouse and preparing it for the dashboard.

- Data visualization and building the dashboard.

- Documentation

Conclusion

You might not need every one of these skills in your day-to-day job as a Data Engineer. However, depending on the role, you may need one or several of them frequently.

Learning most of these well will take time, so keep learning every day. Compounded learning will ensure that you get better with time. There is no shortcut, so don’t believe people who claim they can make you a Data Engineer in one or two months.

Stay Up To Date:

Are you someone like me, who has been working in the industry as a GUI-based ETL developer or Data Modeler? Or even a code-based Data Engineer?

The only secret to staying relevant is to stay up to date with all the changes happening in the industry. Follow data leaders on LinkedIn, and read blogs and newsletters. Most importantly, keep learning every day.
